Many forms of data can be anonymised by removing the personal data.

For example, a survey might be anonymised by removing information such as the names, dates of birth and addresses of respondents.

Mobility data (records of individuals’ locations over time) are more difficult to anonymise, however, because the location records are themselves personal data.

Human movement patterns are highly unique (i.e. each person’s movement patterns are different from anyone else’s) and highly regular (i.e. people maintain similar movement patterns over time, such as commutes), like a fingerprint, creating the potential for people to be identified from their location data. In a GPS dataset, which has much greater temporal and spatial resolution than CDR data, just 4 data points were sufficient to reidentify 95% of individuals when paired with individuals’ social media data.
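The idea of uniqueness can be made concrete with a small sketch. The function below is an illustration only, not the method used in any cited study: it takes a hypothetical `traces` dictionary of subscriber identifiers mapped to sets of (location, time) points, samples `p` points from each trace, and reports the fraction of subscribers whose sample matches nobody else in the dataset. All names and the toy data are assumptions.

```python
import random


def fraction_unique(traces, p, seed=0):
    """Estimate the share of subscribers singled out by p points of their trace.

    traces: dict mapping subscriber id -> set of (location, time) tuples.
    Subscribers with fewer than p points are skipped.
    """
    rng = random.Random(seed)
    eligible = 0
    unique = 0
    for subscriber, points in traces.items():
        if len(points) < p:
            continue
        eligible += 1
        # Draw p known points, as an adversary with side information might hold.
        sample = set(rng.sample(sorted(points), p))
        # Every trace containing all sampled points is a candidate match.
        matches = [s for s, pts in traces.items() if sample <= pts]
        if len(matches) == 1:
            unique += 1
    return unique / eligible if eligible else 0.0


# Toy data: location label and hour of day (hypothetical values).
traces = {
    "u1": {("A", 8), ("B", 12), ("A", 18)},
    "u2": {("A", 8), ("C", 12), ("A", 18)},
    "u3": {("D", 8), ("C", 12), ("D", 18)},
}
print(fraction_unique(traces, p=3))
```

On real data, the fraction typically rises quickly as `p` grows, which is why a handful of known points can be enough to single out one person.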

By removing directly identifying information, such as phone numbers, from CDR data (pseudonymisation) and aggregating the data for many individuals both spatially and temporally, we can minimise the risk of individuals being reidentified. When these steps are combined with some additional protections, we can be confident that the data is anonymised and that individual subscribers will not be reidentified.

Threats to individual privacy

In recent years, researchers from different disciplines have developed algorithms, methodologies, and frameworks to attempt to exploit the attributes of mobility data, particularly the uniqueness and regularity of human mobility, in order to assess the risk such data poses to individual privacy and how individuals can be protected from re-identification.

Whether an adversary (a malicious entity attempting to exploit a dataset to gain personal information about one or more individuals) is attacking individual location data (e.g. individual trajectories or points of interest (PoIs)) or mobility aggregates affects the methods the adversary may use and the likelihood of successfully re-identifying the target individual.

It is much easier to exploit individual location data which has not been aggregated, and a broad range of methods have been developed that allow an adversary to “link” individual location data to other information on a target (e.g. from social media) to reidentify this person, even if the data has been pseudonymised.

These methods fall into three broad categories:

- Record linkage attacks or linkage attacks, which aim to map records in the individual or resampled location data to side information owned by the adversary.

- Homogeneity attacks or attribute linkage attacks, which aim to link the adversary’s side information with sensitive attributes (rather than specific records) in the target individual location data.

- Inference attacks or probabilistic attacks, which aim to increase the adversary’s knowledge by inferring new information about a target’s attributes or movements from the dataset, including likely locations or PoIs of the target.

It is more difficult to reidentify individual subscribers from mobility aggregates.

First the adversary must attempt to infer the trajectories or PoIs of individual subscribers, and even then the information retrieved may not be sufficient to reidentify the target individual(s). Furthermore, the relatively low temporal and spatial resolution of CDR data, in comparison to GPS data, makes this particularly challenging, especially in Low- and Middle-Income Countries (LMICs).

Two groups of privacy researchers have demonstrated that individual trajectories may be inferred from CDR-derived mobility aggregates. However, their methodologies make important assumptions, such as that every subscriber is recorded in every time step and that the data has high spatial and temporal resolution, which are currently unlikely to be true for many CDR datasets, especially those from LMICs.

It is therefore important to ensure that individual location data is secure as this is the data with the greatest risk of reidentification of individual subscribers.

However, care should also be taken when handling mobility aggregates. Greater aggregation (i.e. grouped by larger areas and time periods) makes it more difficult to infer individual trajectories but also needs to be balanced against the level of detail required by the end-user. Furthermore, the individual privacy of subscribers in a mobility aggregate should be further protected using other methodologies, such as k-anonymity.

It is also important to continue to monitor the development of new types of attack and to ensure that the privacy-preserving mechanisms in place remain sufficient to protect the privacy of subscribers.

We should also be aware that while the increased ownership and use of mobile devices can improve the spatial and temporal resolution, and therefore quality, of CDR data in LMICs, it may also increase the risk of reidentification and the threat to individual privacy.

Pseudonymisation

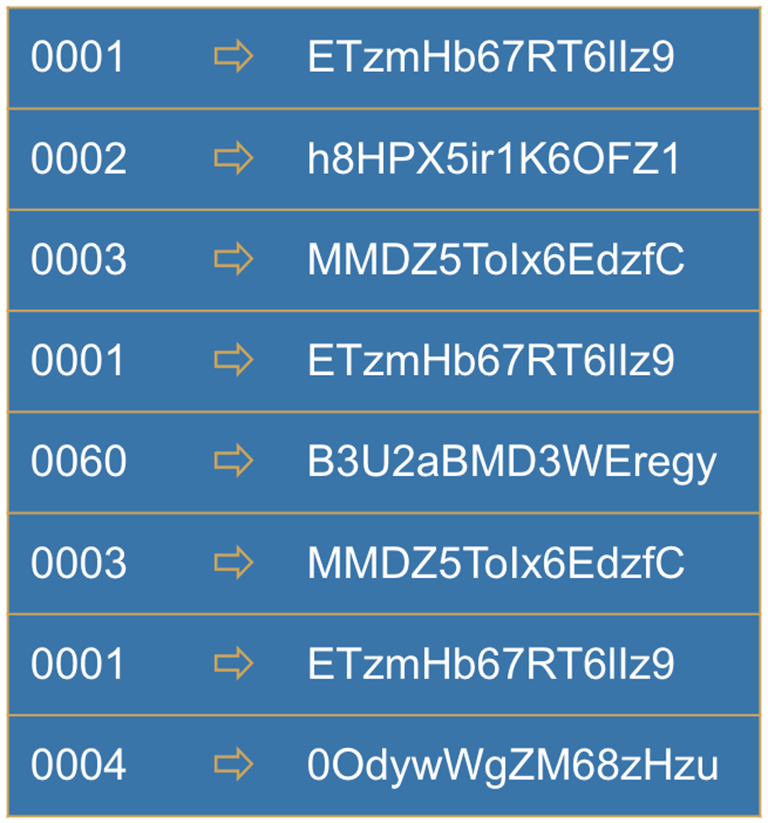

Pseudonymising data involves replacing directly identifying information with randomly-generated values, while ensuring identical values remain identical so that related information remains linked.

For example, we might replace each individual’s telephone number with a string of random characters. This would prevent anyone who knew the telephone number of a given subscriber from directly identifying them in the CDR dataset.
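A minimal sketch of this replacement step is shown below. It assumes each CDR record is a dictionary with a hypothetical `msisdn` field holding the phone number; the field names and sample values are illustrative and not taken from any real schema. Each distinct number is mapped to one random token, so records belonging to the same subscriber remain linked.

```python
import secrets


def pseudonymise(records, id_field="msisdn"):
    """Replace each distinct identifier with a random token, consistently."""
    mapping = {}  # original identifier -> random pseudonym
    pseudonymised = []
    for record in records:
        original = record[id_field]
        if original not in mapping:
            # 128 bits of randomness; the token carries no information
            # about the original number.
            mapping[original] = secrets.token_hex(16)
        pseudonymised.append({**record, id_field: mapping[original]})
    return pseudonymised, mapping


# Hypothetical sample records.
cdr = [
    {"msisdn": "+15550001", "cell_id": "A", "timestamp": "2024-01-01T08:00"},
    {"msisdn": "+15550002", "cell_id": "B", "timestamp": "2024-01-01T08:05"},
    {"msisdn": "+15550001", "cell_id": "C", "timestamp": "2024-01-01T18:00"},
]
pseudonymised, mapping = pseudonymise(cdr)
```

The `mapping` table can reverse the pseudonymisation, so in practice it would need to be stored securely and separately from the data, or discarded.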

While pseudonymisation prevents the direct reidentification of individuals from the dataset, this only obscures individuals’ identities.

The uniqueness and regularity of human mobility mean that individuals may be reidentified from their location data even if other identifying information is removed, so further steps are necessary to anonymise the data.

Aggregation

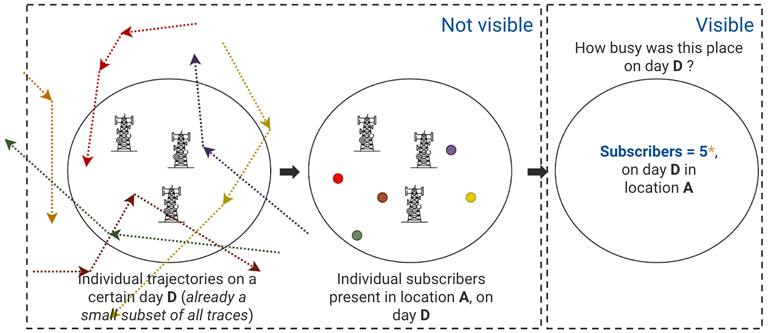

By combining or aggregating the CDR data for many subscribers we can make inferences about the distribution and mobility of the population as a whole while protecting their individual privacy.

CDR data is aggregated spatially (e.g. by district or region) and temporally (e.g. by day or month), depending on the type of indicator being produced and the requirements of the end-user. In addition to helping protect the individual privacy of the subscribers, the spatial aggregation of CDR data removes sensitive information about the number and locations of cell towers in each area, which may be a concern for other stakeholders such as the mobile network operator (MNO).
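As a rough illustration of spatial and temporal aggregation, the sketch below counts distinct subscribers per district per day. The record fields (`subscriber_id`, `cell_id`, `timestamp`), the `CELL_TO_DISTRICT` lookup and the sample values are all hypothetical; a real pipeline would use the operator's own cell-to-area mapping.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical lookup from cell tower to administrative district.
CELL_TO_DISTRICT = {"A": "North", "B": "North", "C": "South"}


def aggregate_daily_counts(records, cell_to_district):
    """Count distinct subscribers seen in each district on each day."""
    subscribers = defaultdict(set)
    for record in records:
        district = cell_to_district[record["cell_id"]]
        day = datetime.fromisoformat(record["timestamp"]).date().isoformat()
        subscribers[(district, day)].add(record["subscriber_id"])
    # Only counts leave this function: no identifiers and no cell IDs.
    return {key: len(ids) for key, ids in subscribers.items()}


records = [
    {"subscriber_id": "p1", "cell_id": "A", "timestamp": "2024-01-01T08:00"},
    {"subscriber_id": "p1", "cell_id": "B", "timestamp": "2024-01-01T09:00"},
    {"subscriber_id": "p2", "cell_id": "A", "timestamp": "2024-01-01T10:00"},
    {"subscriber_id": "p1", "cell_id": "C", "timestamp": "2024-01-02T08:00"},
]
counts = aggregate_daily_counts(records, CELL_TO_DISTRICT)
```

Because the output is keyed by district rather than by cell, it reveals neither individual records nor the number or positions of towers within a district.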

However, aggregation may not be sufficient to protect the individual privacy of all subscribers. Aggregation relies on there being a sufficient number of subscribers in each area in each time frame to prevent any individual being reidentified. Without further checks, aggregation alone risks producing outputs in which only one or very few subscribers are present in a given location at a given time, which may put them at risk of reidentification.

In order to sufficiently prevent the reidentification of any individuals, it is therefore necessary to implement additional anonymisation methods.

Additional anonymisation methods

Pseudonymisation and aggregation may not be sufficient to prevent the reidentification of all individual subscribers from CDR data.

To better ensure that the individual privacy of subscribers is preserved, we can use additional anonymisation methods such as k-anonymity. A dataset can be described as k-anonymised if each subset of data points for a given individual (i.e. their location at each time point) is shared by at least k-1 other subscribers. If we set k to 15, the standard used by Flowminder, this means that the aggregated CDR data must have at least 16 subscribers associated with (present or resident in) each location at each time point. Any combinations of location and time associated with fewer than 15 subscribers are redacted from the dataset.
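The redaction step can be sketched as a simple filter over aggregated counts. This is an illustration under stated assumptions, not Flowminder's implementation: `counts` is a hypothetical dictionary keyed by (location, time period), and the function drops any entry with fewer than `min_count` subscribers. Whether the boundary value itself is kept depends on the convention adopted, so the threshold is left as a parameter.

```python
def redact_small_counts(counts, min_count=15):
    """Drop every (location, period) entry with fewer than min_count subscribers."""
    return {key: n for key, n in counts.items() if n >= min_count}


# Hypothetical aggregated subscriber counts per district per day.
counts = {
    ("North", "2024-01-01"): 120,
    ("South", "2024-01-01"): 15,
    ("East", "2024-01-01"): 3,
}
released = redact_small_counts(counts)
# The "East" entry is removed because only 3 subscribers were observed there.
```

Redacted entries are removed entirely rather than replaced with zero, so that a reader cannot mistake a suppressed count for a true absence of subscribers.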

While ensuring k-anonymity with a threshold of 15 is currently sufficient to preserve the individual privacy of subscribers in a CDR dataset, anonymisation is a moving target as new methods for reidentification and for data protection continue to be developed.

For example, extensions of k-anonymity such as historical k-anonymity and L-diversity have been proposed to provide further protection, particularly for GPS data, which is more vulnerable to reidentification attacks due to its greater spatial and temporal resolution. Privacy researchers have also proposed using neural networks to generate synthetic mobility datasets. These are based on real datasets and maintain the same aggregated statistical properties and patterns, but are not generated directly from the mobility data of real subscribers, meaning that no subscribers can be reidentified. However, these techniques are still being developed and are not currently necessary for the anonymisation of CDR data.